In this blog post I show some experiments with algorithmic recognition of images of handwritten digits.

I followed the algorithm described in Chapter 10 of the book “Matrix Methods in Data Mining and Pattern Recognition” by Lars Eldén.

The algorithm uses the so-called thin Singular Value Decomposition (SVD).

- Training phase

1.1. Rasterize each training image into an array of 16 x 16 pixels.

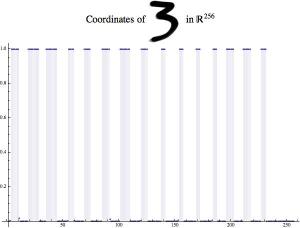

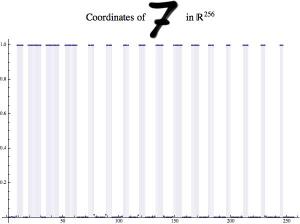

1.2. Each raster image is linearized: its rows are concatenated into a one-dimensional array. In other words, each raster image is mapped to a vector in R^256. We will call these one-dimensional arrays raster vectors.

1.3. For each digit, stack the raster vectors of its training images as the rows of a matrix with 256 columns.

1.4. Using the matrices from step 1.3, use thin SVD to derive orthogonal bases that describe the image data for each digit.

- Recognition phase

2.1. Given an image of an unknown digit derive its raster vector, R.

2.2. Find the residuals of the approximations of R with each of the bases found in 1.4.

2.3. The digit with the minimal residual is the recognition result.
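In symbols, one common way to write steps 2.2 and 2.3 is the following. Here B_d is notation introduced for this note (it is not from the text above) and denotes the 256 × k matrix whose orthonormal columns are the basis vectors kept for digit d in step 1.4. The book may normalize the residual differently.

$$
\rho_d(R) = \lVert R - B_d B_d^{\top} R \rVert_2, \qquad \hat{d} = \arg\min_{d \in \{0,\dots,9\}} \rho_d(R)
$$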

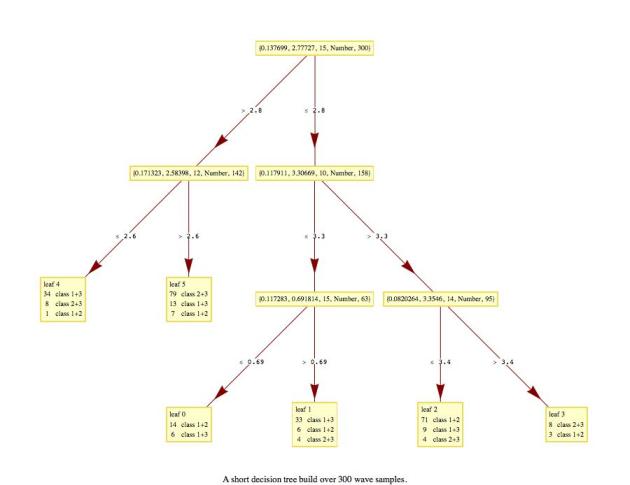

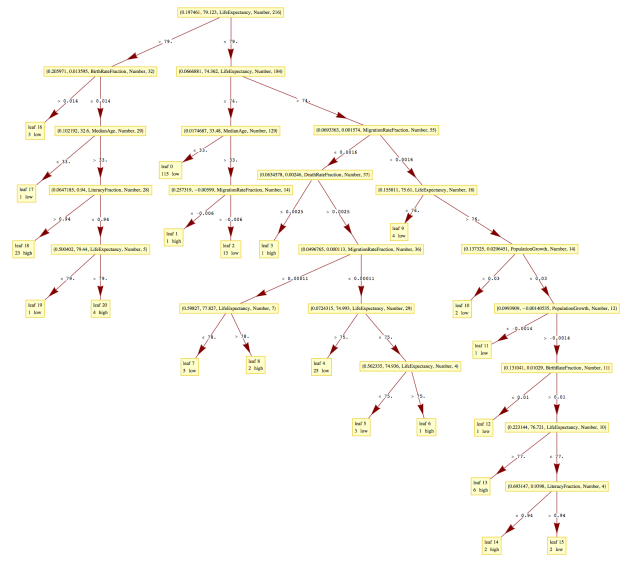
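The two phases above can be sketched in a few lines. This is a minimal illustration in Python with NumPy, not the Mathematica code used for the experiments. The function names, the number of kept basis vectors `k`, and the synthetic "stroke" images are all assumptions made for the example.

```python
import numpy as np

def train_bases(images_by_digit, k=5):
    """Return {digit: B}, where B is a 256 x k matrix with orthonormal columns.

    images_by_digit maps a digit to an array of shape (n_images, 16, 16).
    k (how many basis vectors to keep) is a hypothetical choice.
    """
    bases = {}
    for digit, images in images_by_digit.items():
        # Steps 1.2-1.3: flatten row by row; each row of A is a raster vector.
        A = np.asarray(images, dtype=float).reshape(len(images), -1)
        # Step 1.4: thin SVD; the rows of Vt are orthonormal vectors in R^256.
        _, _, Vt = np.linalg.svd(A, full_matrices=False)
        bases[digit] = Vt[:k].T
    return bases

def residuals(bases, image):
    """Step 2.2: norm of what is left of the raster vector after projecting onto each basis."""
    r = np.asarray(image, dtype=float).ravel()
    return {d: float(np.linalg.norm(r - B @ (B.T @ r))) for d, B in bases.items()}

def classify(bases, image):
    """Step 2.3: the digit whose basis gives the smallest residual."""
    res = residuals(bases, image)
    return min(res, key=res.get)

# Synthetic stand-ins for digit drawings (assumption): one stroke pattern per
# class, plus small noise to imitate nine slightly different training drawings.
rng = np.random.default_rng(0)
patterns = {0: np.zeros((16, 16)), 1: np.zeros((16, 16))}
patterns[0][:, 4] = 1.0   # vertical stroke
patterns[1][8, :] = 1.0   # horizontal stroke
train = {d: p + 0.05 * rng.standard_normal((9, 16, 16)) for d, p in patterns.items()}

bases = train_bases(train, k=3)
print(classify(bases, patterns[0]), classify(bases, patterns[1]))
```

With only nine training drawings per digit, `k` cannot usefully exceed nine, and the best value would have to be found by experiment.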

The algorithm is easy to program in Mathematica. I ran some experiments with training and test digit drawings made with the iPad app Zen Brush. I applied both the SVD recognition algorithm described above and decision trees, the latter in the same way as described in the previous blog post.

Here is a table of the training images:

And here is a table of the test images:

Note that the third row is with images drawn with a thinner brush, and the fourth row is with images drawn with a thicker brush.

Here are raster images of the top row of the test drawings:

Here are several plots showing raster vectors:

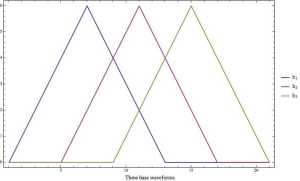

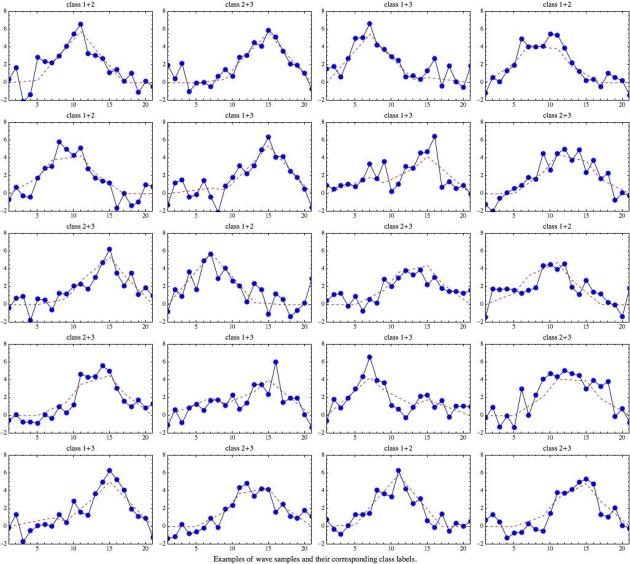

As I mentioned earlier, raster vectors are very similar to the wave samples described in the previous blog post, so we can apply decision trees to them.

The SVD algorithm misclassified only 3 of the 36 test digit images, a success ratio of about 92%. Here is a table with digit drawings and list plots of the residuals:

It is interesting to look at the residuals obtained for different recognition instances. For example, the plot in the first row and first column, for the recognition of a drawing of “2”, shows that the residual corresponding to 2 is the smallest and the residual for 8 is the next smallest; the residual for 2 is a clear outlier. In the second row and third column we can see that a drawing of “4” has been classified correctly as 4, but the residual for 9 is very close to the residual for 4: we almost had a misclassification. For the other three test images of “4” the residuals for 4 are clearly separated from the rest, which can be explained by the “4” being drawn more slanted, with a more pronounced angle. Examining the misclassifications in a similar way explains why they occurred.
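One way to put a number on "almost a misclassification" is the relative gap between the smallest and the second-smallest residual. The helper below is a hypothetical sketch in Python, and the residual values in the example are invented for illustration; they are not the measurements from the plots.

```python
def residual_margin(residuals):
    """Return (best, runner_up, relative_gap) for a dict mapping digit -> residual.

    relative_gap is near 0 when the two smallest residuals are almost equal
    and near 1 when the best residual is a clear outlier.
    """
    ranked = sorted(residuals, key=residuals.get)
    best, runner_up = ranked[0], ranked[1]
    second = residuals[runner_up]
    gap = 0.0 if second == 0 else (second - residuals[best]) / second
    return best, runner_up, gap

# Invented residuals for a drawing of "4" that is nearly mistaken for a "9".
example = {4: 0.50, 9: 0.52, 1: 0.90}
best, runner_up, gap = residual_margin(example)
print(best, runner_up, round(gap, 3))
```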

Here are the misclassified images:

Note the misclassified image of 7 is quite different from the training images for 7.

The decision tree misclassified 42% of the images; here is a table of them:

Note that the decision trees would probably perform better with a larger training set than just nine drawings per digit. I also experimented with building the classifiers over the “negative” images and with concatenating the columns of the raster images instead of the rows. The classification results were not better.
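The two variations just mentioned change only how a raster becomes a vector. Here is a small Python/NumPy sketch, assuming pixel values scaled to the range [0, 1]; the function name and the flags are made up for the illustration.

```python
import numpy as np

def raster_vector(image, negative=False, by_columns=False):
    """Flatten a 2D raster with values in [0, 1] into a 1D raster vector."""
    a = np.asarray(image, dtype=float)
    if negative:
        a = 1.0 - a  # swap ink and background
    # order="C" concatenates the rows; order="F" concatenates the columns.
    return a.ravel(order="F" if by_columns else "C")

tiny = np.array([[0.0, 1.0],
                 [0.5, 0.0]])
print(raster_vector(tiny))
print(raster_vector(tiny, by_columns=True))
print(raster_vector(tiny, negative=True))
```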

Some details about the image preprocessing follow.

As I said, I drew the images using the Zen Brush app. For each digit I drew nine instances on the Zen Brush canvas and exported them as a single image; here is an example:

Then I used Mathematica's function ImagePartition to partition the image into nine single-digit drawings, and then applied ImageCrop to all of them. The same procedure was applied to the test images.
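For readers without Mathematica, the partition-and-crop step can be approximated with plain array slicing. The sketch below is a rough Python/NumPy analogue, not the behavior of ImagePartition or ImageCrop themselves. It assumes a grayscale sheet with a uniform white background (value 1.0) and a 3 × 3 layout of the nine drawings; both are assumptions, since the post does not state the layout.

```python
import numpy as np

def partition(sheet, rows=3, cols=3):
    """Split a 2D array into rows x cols equal tiles (leftover pixels are dropped)."""
    h, w = sheet.shape[0] // rows, sheet.shape[1] // cols
    return [sheet[r * h:(r + 1) * h, c * w:(c + 1) * w]
            for r in range(rows) for c in range(cols)]

def crop_to_ink(tile, background=1.0):
    """Trim the uniform border, keeping the bounding box of non-background pixels."""
    ink = tile != background
    if not ink.any():
        return tile  # nothing drawn: leave the tile unchanged
    rows = np.flatnonzero(ink.any(axis=1))
    cols = np.flatnonzero(ink.any(axis=0))
    return tile[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

# A toy 30 x 30 "canvas" with one dark stroke inside the center tile.
sheet = np.ones((30, 30))
sheet[12:18, 14:16] = 0.0
tiles = partition(sheet)
print(len(tiles), crop_to_ink(tiles[4]).shape)
```

The cropped drawings would still need to be resized to 16 x 16 rasters before step 1.1; that resizing is not shown here.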

Further developments

Further developments with the MNIST data set are described and discussed in the blog post “Handwritten digits recognition by matrix factorization” and the forum discussion “[Mathematica-vs-R] Handwritten digits recognition by matrix factorization”.