This blog post discusses the generation of Naive Bayesian Classifiers (NBCs) and how they can be used to explain correlations in data. For more theoretical details, and for the Mathematica code that generates the data tables and plots in this post, see the document “Basic theory and construction of naive Bayesian classifiers” provided by the project Mathematica For Prediction at GitHub. (The code for NBC generation and classification is also provided by that project.)

We consider the following classification problem: given a two-dimensional array representing a list of observations and the labels associated with them, predict the label of a new, unseen observation.

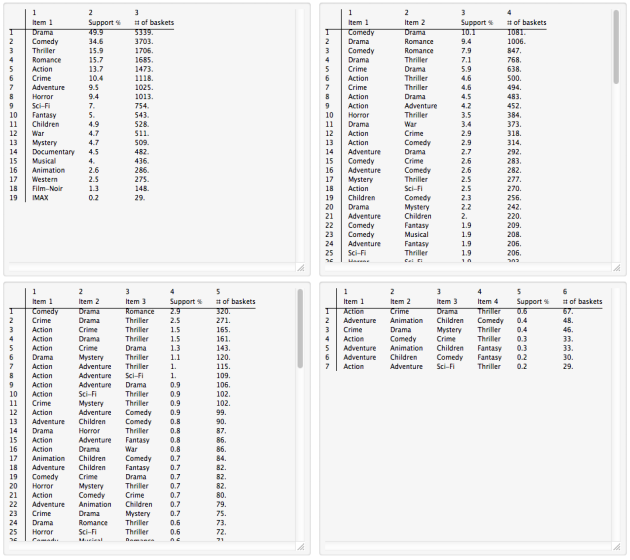

Consider for example this sample of a data array with country data:

We assume that we have the following observed variables:

{“PopulationGrowth”, “LifeExpectancy”, “MedianAge”, “LiteracyFraction”, “BirthRateFraction”, “DeathRateFraction”, “MigrationRateFraction”}

The predicted variable is “GDP per capita”, the last column, with the labels “high” and “low”.

Note that the values of the predicted variable “high” and “low” can be replaced with True and False respectively.

One way to solve this classification problem is to construct an NBC, and then apply that NBC to new, unknown records composed of the observed variables.
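
To make the construct-then-apply idea concrete, here is a minimal sketch in Python. It is not the Mathematica implementation from the project mentioned above: it assumes a Gaussian model for each observed variable within each label, which is one common way to build an NBC and may differ from the probability functions used in this post. The function names `train_gaussian_nbc` and `classify` and the sample values are made up for illustration.

```python
import math
from collections import defaultdict


def train_gaussian_nbc(records):
    """Fit label priors and per-variable Gaussian parameters.

    Each record is a sequence of numeric observed values followed by a label.
    Assumption: every variable is modeled as a Gaussian within each label.
    """
    by_label = defaultdict(list)
    for *values, label in records:
        by_label[label].append(values)

    model = {}
    total = len(records)
    for label, rows in by_label.items():
        columns = list(zip(*rows))
        means = [sum(col) / len(col) for col in columns]
        # A small floor on the variance avoids division by zero
        # when a column is constant within a label.
        variances = [
            max(sum((v - m) ** 2 for v in col) / len(col), 1e-9)
            for col, m in zip(columns, means)
        ]
        model[label] = (len(rows) / total, means, variances)
    return model


def classify(model, values):
    """Return the label with the largest log-posterior score."""

    def log_score(prior, means, variances):
        # log P(label) + sum of log Gaussian densities, one per variable
        # (the "naive" independence assumption).
        score = math.log(prior)
        for v, m, var in zip(values, means, variances):
            score += -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
        return score

    return max(model, key=lambda label: log_score(*model[label]))


# Made-up records: (life expectancy, median age, label).
training = [
    (81.0, 42.0, "high"),
    (80.0, 40.0, "high"),
    (82.0, 44.0, "high"),
    (62.0, 19.0, "low"),
    (65.0, 22.0, "low"),
    (60.0, 18.0, "low"),
]
model = train_gaussian_nbc(training)
print(classify(model, (79.0, 41.0)))
print(classify(model, (63.0, 20.0)))
```

The scores are summed in log space, so multiplying many small probabilities does not underflow. The label with the highest score is the prediction.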

Consider the following experiment:

1. Make a data array by querying CountryData for the observed variables:

{“PopulationGrowth”, “LifeExpectancy”, “MedianAge”, “LiteracyFraction”, “BirthRateFraction”, “DeathRateFraction”, “MigrationRateFraction”, “Population”, “GDP”}

2. Replace the last two columns in the data array with a column of the labels “high” and “low”. The countries with GDP per capita greater than $30000 are labeled with “high”, the rest are labeled with “low”.

3. Split the data into a training set and a testing set.

3.1. For the training set randomly take 80% of the records with the label “high” and 80% of the records with the label “low”.

3.2. The rest of the records make the testing set.

4. Generate an NBC using the training set.

5. Classify the records in the testing set with the classifier obtained at step 4.

6. Compare the actual labels with the predicted labels.

6.1. Make the comparison for all records,

6.2. only for the records labeled with “high”, and

6.3. only for the records labeled with “low”.

7. Repeat steps 3, 4, 5, and 6 if desired.
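
The data-handling parts of the experiment (steps 2, 3, and 6) can be sketched as follows. This is an illustration in Python, not the code from the package used in this post. The helper names `label_records`, `stratified_split`, and `success_ratios` are hypothetical, the rows are made up, and a simple threshold rule stands in for the NBC of steps 4 and 5.

```python
import random


def label_records(rows, threshold=30000):
    """Step 2: replace the trailing (population, GDP) pair with a label."""
    labeled = []
    for *observed, population, gdp in rows:
        label = "high" if gdp / population > threshold else "low"
        labeled.append((*observed, label))
    return labeled


def stratified_split(records, fraction=0.8, rng=random):
    """Step 3: take `fraction` of each label for training, the rest for testing."""
    training, testing = [], []
    for label in sorted({r[-1] for r in records}):
        group = [r for r in records if r[-1] == label]
        rng.shuffle(group)
        cut = round(fraction * len(group))
        training.extend(group[:cut])
        testing.extend(group[cut:])
    return training, testing


def success_ratios(actual, predicted):
    """Step 6: fraction of correct predictions overall and per actual label."""
    pairs = list(zip(actual, predicted))
    ratios = {"all": sum(a == p for a, p in pairs) / len(pairs)}
    for label in sorted(set(actual)):
        hits = [a == p for a, p in pairs if a == label]
        ratios[label] = sum(hits) / len(hits)
    return ratios


rng = random.Random(0)  # fixed seed so the split is repeatable
# Made-up rows: (life expectancy, median age, population, GDP).
rows = [(80.0, 41.0, 1_000_000, 45_000_000_000)] * 10 \
     + [(62.0, 20.0, 1_000_000, 3_000_000_000)] * 10
records = label_records(rows)
training, testing = stratified_split(records, 0.8, rng)

# Stand-in for steps 4 and 5: a hypothetical threshold rule, not an NBC.
predicted = ["high" if r[0] > 70 else "low" for r in testing]
actual = [r[-1] for r in testing]
print(success_ratios(actual, predicted))
```

Splitting each label group separately keeps the share of “high” and “low” records the same in both sets, which matters when one label is much rarer than the other. Step 7 corresponds to calling `stratified_split` again with a different seed.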

Applying the NBC implementation from the package NaiveBayesianClassifier.m, we get the following predictions:

Here are the classification ratios (from step 6):

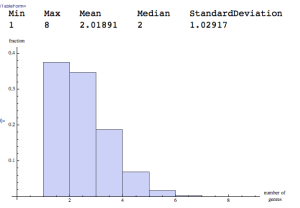

Here are the plots of the probability functions of the classifier:

(Well, actually I skipped the last one for aesthetic purposes, see the document mentioned at the beginning of the blog post for all of the plots and theoretical details.)

If we imagine the Cartesian product of the probability plots, we can get an idea of what kind of countries would have high GDP per capita (i.e. more than $30000).